Environment Overview

Introduction

Learn-to-Race (L2R) is an OpenAI-gym compliant, multimodal control environment, where agents learn how to race.

Unlike many simplistic learning environments, ours is built around high-fidelity simulators, based on Unreal Engine 4, such as the Arrival Autonomous Racing Simulator—featuring full software-in-the-loop (SIL) and even hardware-in-the-loop (HIL) simulation capabilities. This simulator has played a key role in bringing autonomous racing technology to real life in the Roborace series, the world’s first extreme competition of teams developing self-driving AI. The L2R framework is the official training environment for Carnegie Mellon University’s Roborace team, the first North American team to join the international challenge.

Autonomous Racing poses a significant challenge for artificial intelligence, where agents must make accurate and high-risk control decisions in real-time, while operating autonomous systems near their physical limits. The L2R framework presents objective-centric tasks, rather than providing abstract rewards, and provides numerous quantitative metrics to measure the racing performance and trajectory quality of various agents.

As in the real-world, the Arrival Autonomous Racing Simulator is not time-invariant. In order to generate deployable solutions, latencies that result from model inference and from algorithm optimisation must be considered in the design of control policies. For example (model inference latency), whereas using large-capacity visual processing backbones may be desirable from a representation learning perspective, these large processing pipelines can induce significant inference latency for real-time applications. As another example (algorithm optimisation latency), the optimisation of learning-based approaches (e.g., reinforcement learning algorithms, with neural function approximators) often involves performing gradient steps, which, if performed in the middle of an episode, can also introduce latency. Latencies that go unaddressed could interfere with the control algorithm’s ability to send quick control commands and, thus, could cause the vehicle to drive outside the admissible area or to engage in unsafe behaviour on the road. Both of these situations pose fascinating and exciting challenges for autonomous racing research. We hope to encourage work on these and other interesting problems, through the use of the Learn-to-Race framework.

For more information, please read a couple of our conference papers:

Baseline Models

We provide three baseline models, to demonstrate how to use L2R: a random agent, a model predictive control (MPC) agent, a Soft Actor-Critic reinforcement learning (RL) agent, and an imitation learning agent based on the MPC’s demonstrations.

The RandomAgent executes actions, completely at random. The MPCAgent recursively plans trajectories according to a dynamics model of the vehicle, then executes actions according to the current plan. The SACAgent is a learning-based method, which relies on the optimisation of a stochastic policy, model-free.

Action Space

In the Learn-to-Race tasks, agents execute actions in the environment, according to steering and acceleration control, each supported by the simulator on a continuous range from -1.0 to 1.0.

Action |

Type |

Range |

|---|---|---|

Steering |

Continuous |

[-1.0, 1.0] |

Acceleration |

Continuous |

[-1.0, 1.0] |

To provide additional flexibility for learning-based approaches, the L2R framework supports a scaled action space of [-1.0, 1.0] for steering control and [-16.0, 6.0] for acceleration control, by default. You can modify the boundaries of the action space by changing the parameters for env_kwargs.action_if_kwargs in params-env.yaml.

Negative acceleration commands perform braking actions, until the vehicle is stationary. If negative acceleration commands continue after the vehicle is stationary, the vehicle will reverse.

While you can change the gear, in practice we suggest forcing the agent to stay in drive since the others would not be advantageous in completing the tasks we present (we don’t include it as a part of the action space). Note that negative acceleration values will brake the vehicle.

Observation Space

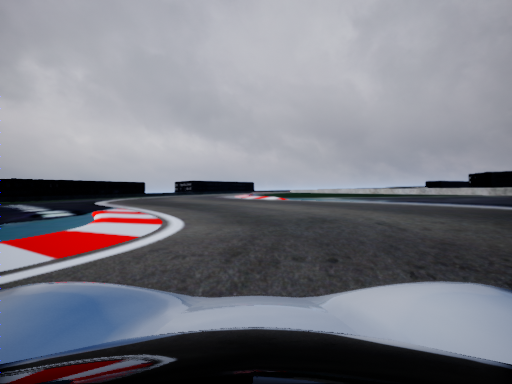

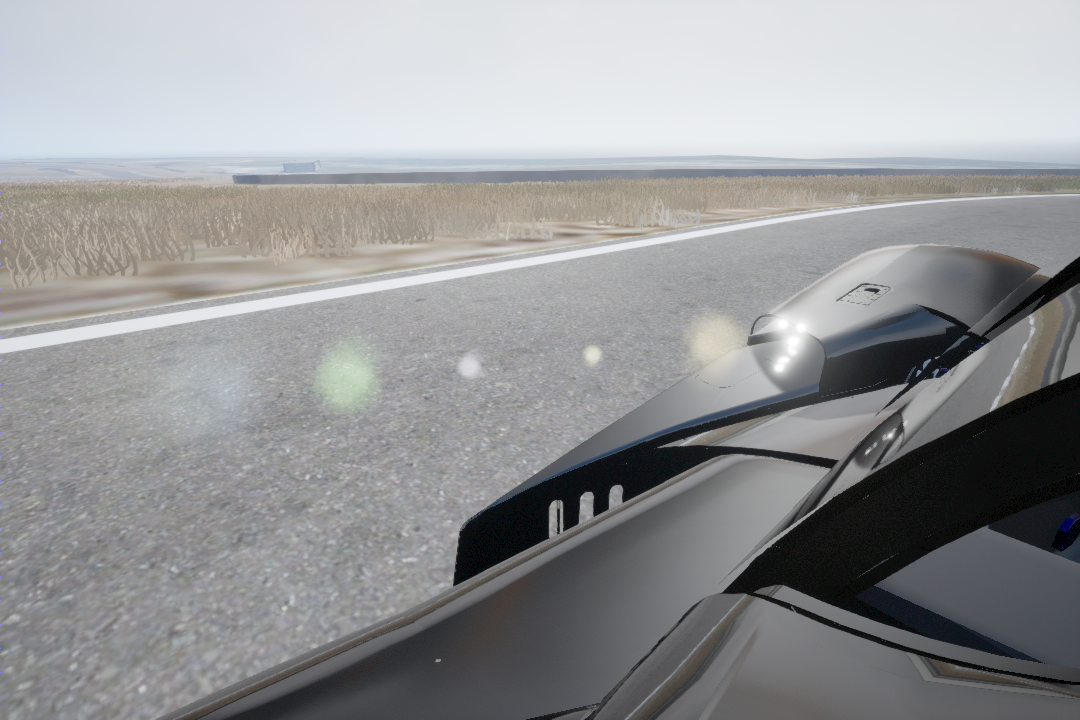

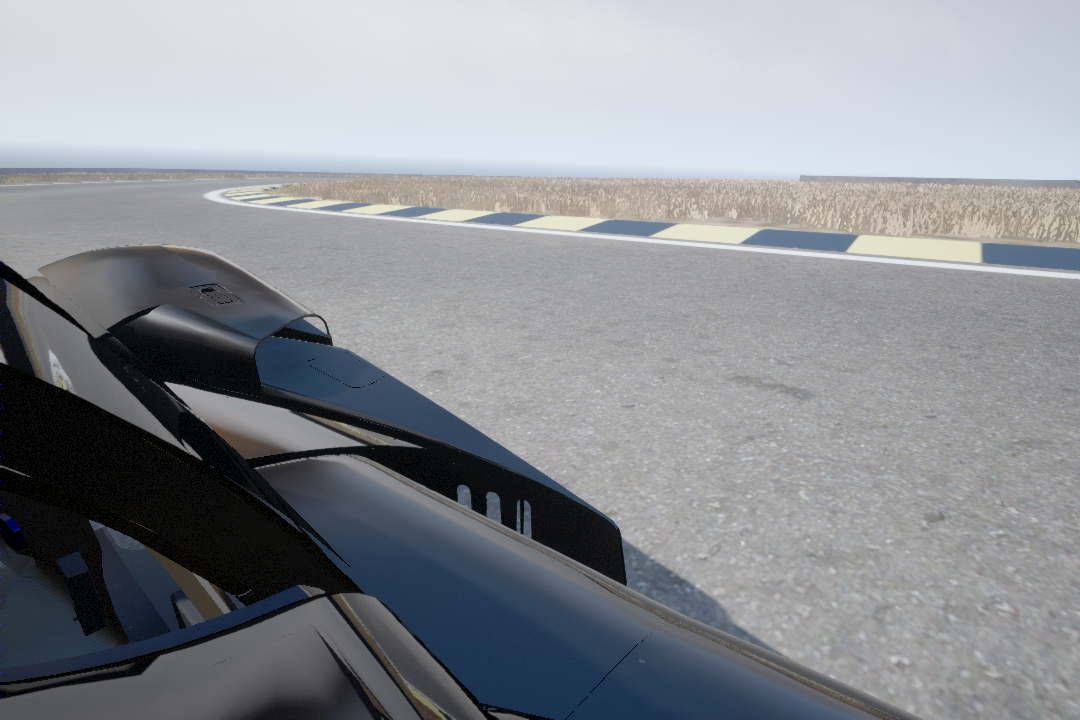

We offer two high-level settings for the observation space: vision-only and multimodal. In both, the agent receives RGB images from the vehicle’s front-facing camera, examples below. In the latter, the environment also provides sensor data, including pose data from the vehicle’s IMU sensor.

Customizable Sensor Configurations

One of the key features of this environment is the ability to create arbitrary configurations of vehicle sensors. This provides users a rich sandbox for multimodal, learning based approaches. The following sensors are supported and can be placed, if applicable, at any location relative to the vehicle:

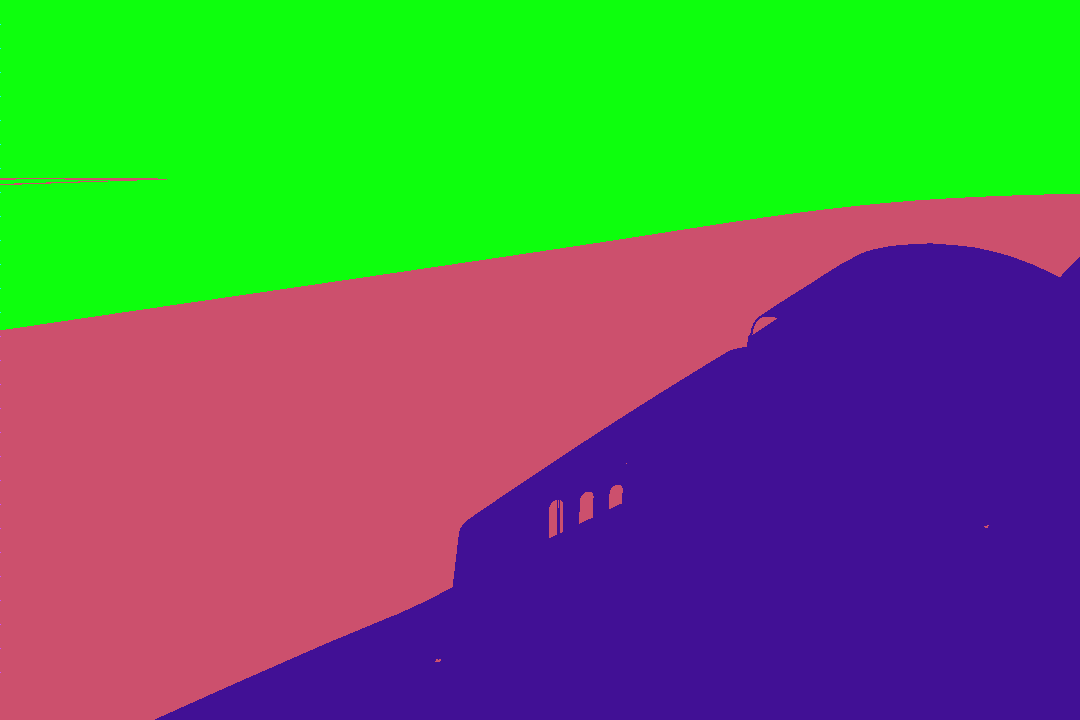

RGB cameras

Depth cameras

Ground truth segmentation cameras

Fisheye cameras

Ray trace LiDARs

Depth 2D LiDARs

Radars

Additionally, these sensors are parameterized and can be customized further; for example, cameras have modifiable image size, field-of-view, and exposure. Default sensor configurations are provided in env_kwargs.cameras and sim_kwargs in params-env.yaml. We provide further description on sensor configuration

You can create cameras anywhere relative to the vehicle, allowing unique points-of-view such as a birdseye perspective which we include in the vehicle configuration file.

For more information, see Creating Custom Sensor Configurations

Whereas we encourage the use of all sensors for training and experimentation, only the CameraFrontRGB camera will be used for official L2R task evaluation, e.g., in our Learn-to-Race Autonomous Racing Virtual Challenges.

Interfaces and configuration

The environment interacts with additional modules in the overall L2R framework, such as the racetrack mapping (for loading and configuring the world), the Controller (which interfaces with an underlying simulator or vehicle stack) and the Tracker (which tracks the vehicle state and measures progress along the racetrack).

Whereas each of these interfaces can be further configured from params-env.yaml, the default values provided will be used for official L2R task evaluation, e.g., in our Learn-to-Race Autonomous Racing Virtual Challenges.

Tracker (l2r/core/tracker.py), configured via env_kwargs in configs/params-env.yaml

Controller (l2r/core/controller.py), configured via env_kwargs.controller_kwargs in configs/params-env.yaml

racetrack (l2r/racetracks/mapping.py), configured via sim_kwargs in params-env.yaml

Racetracks

We currently support two racetracks in our environment, both of which emulate real-world tracks. The first is the Thruxton Circuit, modeled off the track at the Thruxton Motorsport Centre in the United Kingdom. The second is the Anglessey National Circuit, located in Ty Croes, Anglesey, Wales.

Additional tracks are used for evaluation, e.g., in open Learn-to-Race Autonomous Racing Virtual Challenges, such as the Vegas North Road track, located at Las Vegas Motor Speedway in the United States.

We will continue to add more racetracks in the future, for both training an evaluation.

Research Citation

Please cite this work if you use L2R as a part of your research.

@inproceedings{herman2021learn,

title={Learn-to-Race: A Multimodal Control Environment for Autonomous Racing},

author={Herman, James and Francis, Jonathan and Ganju, Siddha and Chen, Bingqing and Koul, Anirudh and Gupta, Abhinav and Skabelkin, Alexey and Zhukov, Ivan and Kumskoy, Max and Nyberg, Eric},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={9793--9802},

year={2021}

}